FAN=Frontier of Artificial Networks

Bio

I am a tenure-track Assistant Professor in the Department of Data Science at the City University of Hong Kong. I completed my PhD at Rensselaer Polytechnic Institute (RPI), US, a small but lovely university, where I was blessed to be advised by Dr. Ge Wang and to make so many wonderful friends. Prior to RPI, I completed my undergraduate studies at Harbin Institute of Technology, China.

My research interest is in NeuroAI and its applications in data science. I usually build models by drawing inspiration from neuroscience and then establish theory for the resulting neuro-inspired models. Besides my research, I am a big fan of math and physics. I also run a blog on WeChat together with my friends. If you are interested in my research, please feel free to reach out (hitfanfenglei@gmail.com).

- Phone: +852 84919576

- City: Hong Kong

- Degree: PhD

- Email: hitfanfenglei@gmail.com

News

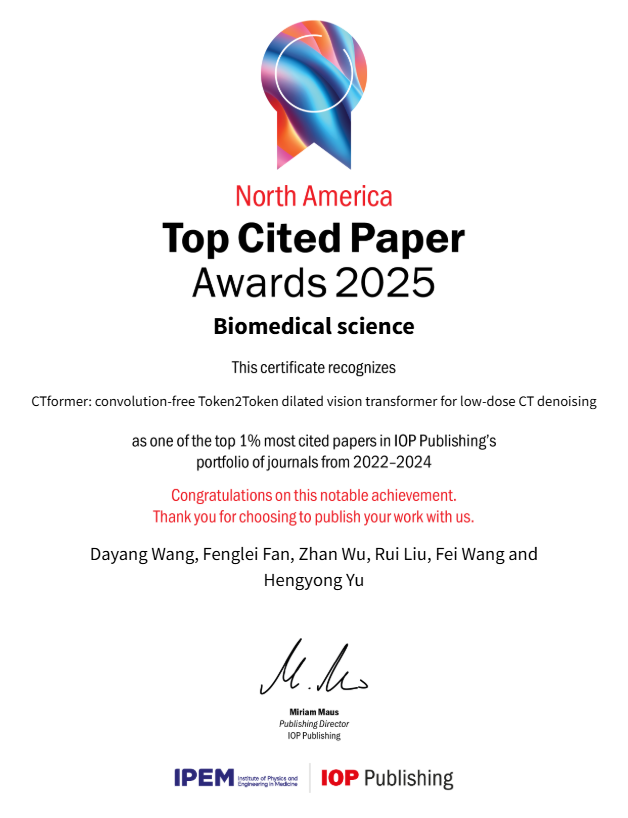

- Oct 22, 2025. My paper was recognized as top-cited by IOP.

- Sep 24, 2025. I was on Guangxi TV!

- Sep 15, 2025. Chat with Prof. Hanchuan Peng!

- Aug 3, 2025. Prof. Tao Luo from SJTU visited me and delivered a talk.

- July 17, 2025. Served as an external expert for the disciplinary development of Guangxi Minzu University.

- July 3, 2025. I was glad to visit Longhua Precision Metrology Institute, Shenzhen!

- June 20, 2025. I organized an AI seminar series in our department, covering a wide range of topics such as computer vision, deep learning theory, robotics, and model compression.

- April 27, 2025. Visited the Institute of Advanced Study, Beijing Normal and Hong Kong Baptist University, Zhuhai, China.

- March 31, 2025. Happy to deliver a talk at Changxin Memory Technologies, Hefei, China

- March 30, 2025. Visited Prof. Li Li at USTC, Hefei, China.

- March 28, 2025. Happy to serve as a PhD thesis external examiner for Institute for Interdisciplinary Information Sciences, Tsinghua University.

- Feb 25, 2025. Our special issue "Deployment of Large Models in Healthcare" was released in IEEE TRPMS. Please consider submitting!

- Jan 15, 2025. Prof. Leo Yu Zhang from Griffith University, Australia, visited me.

- Jan 14, 2025. Happy to serve as a Senior PC member for IJCAI 2025.

- Jan 8, 2025. Prof. Tongliang Liu from the University of Sydney, Australia, visited me.

- Dec 7, 2024. Prof. Huan Xiong from Harbin Institute of Technology visited me

- Nov 27, 2024. Held a coffee hour with students in my class, a voluntary event open to every student. I also learned a lot from the students' questions. Times have changed!

- Oct 29, 2024. Visited Dr. Yang Lyu, Mr. Xinzhen Zhang, and Dr. Zefan Lin at United Imaging, a company I have long admired.

- Oct 28, 2024. Visited Prof. Guohua Cao at ShanghaiTech. I learned a lot!

- Oct 8, 2024. Lunch with Dean Song. I learned a lot!

- Sep 14, 2024. RPI Alumni Lunch with Drs. Ke Yang, Qingpeng Zhang, and Zuankai Wang.

- Aug 24, 2024. I was happy to have discussions with the talented people at *** in Songshan Lake, Dongguan!

- Aug 21, 2024. My collaborator Dr. Jianjun Wang (Deputy Chief Engineer of Guangxi Road&Bridge Group) visited me!

- July 25, 2024. Visited Drs. Rui and Deng in Paris!

- July 15, 2024. My old friends Drs. Yen-jen Cheng (National Pingtung University) and Chia-An Liu (Soochow University) visited me. It was so much fun to chat with them again on algebraic graph theory and group representation theory!

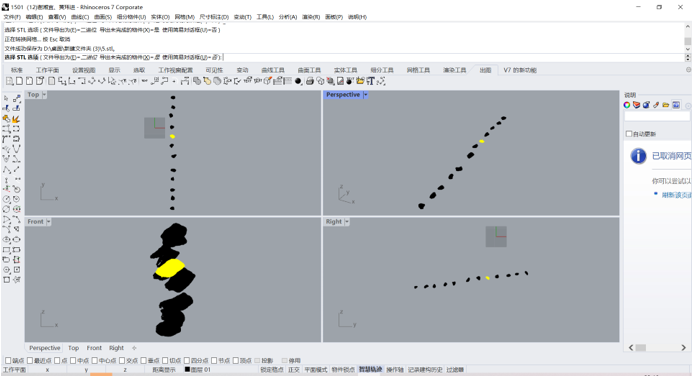

- July 1, 2024. Thanks to my mentee Jingbo Zhang! The concrete gradation project with Guangxi Road&Bridge Group was completed and received a thumbs-up from experts in Guangxi. This is the first industrial project of my career!

- Nov 1, 2023. My mentee Zelin Dong won the Gold Medal in Undergraduate Research Opportunities Program (UROP), Department of Mathematics, The Chinese University of Hong Kong (CUHK)

Research Experience

- 2025.03 - present: Assistant Professor, Department of Data Science, City University of Hong Kong, HK

- 2022.12 - 2025.02: Research Assistant Professor, Department of Mathematics, The Chinese University of Hong Kong, HK

- 2021.9 - 2022.8: Postdoctoral Associate, Prof. Fei Wang’s Lab, Department of Computer Science, Cornell University, New York, NY, US

- 2020.1 – 2020.8: Research Intern, Dr. Dmitry Krotov’s Group, MIT-IBM Watson AI Lab, Cambridge, MA, US

- 2019.5 – 2019.8: Summer Intern, GE Global Research Center, Niskayuna, NY, US

- 2016.9 – 2017.6: Research Associate, Prof. Jian Liu’s Lab, School of Precise Instrument, Harbin Institute of Technology, Harbin, Heilongjiang, China

- 2016.6 – 2016.8: Visiting Student, Prof. Jean Michel Nunzi’s Lab, Department of Physics, Queen’s University, Kingston, Ontario, Canada

- 2015.1 – 2015.6: Visiting Student, Prof. Chin-Wen Weng’s Group, Department of Applied Mathematics, National Chiao Tung University, Hsinchu, Taiwan, China

Honors and Awards

- October 25, 2025: Our paper received a top-cited award from IOP.

- June 25, 2024: I was selected as the recipient of the 2024 OlympusMons Pioneering Award.

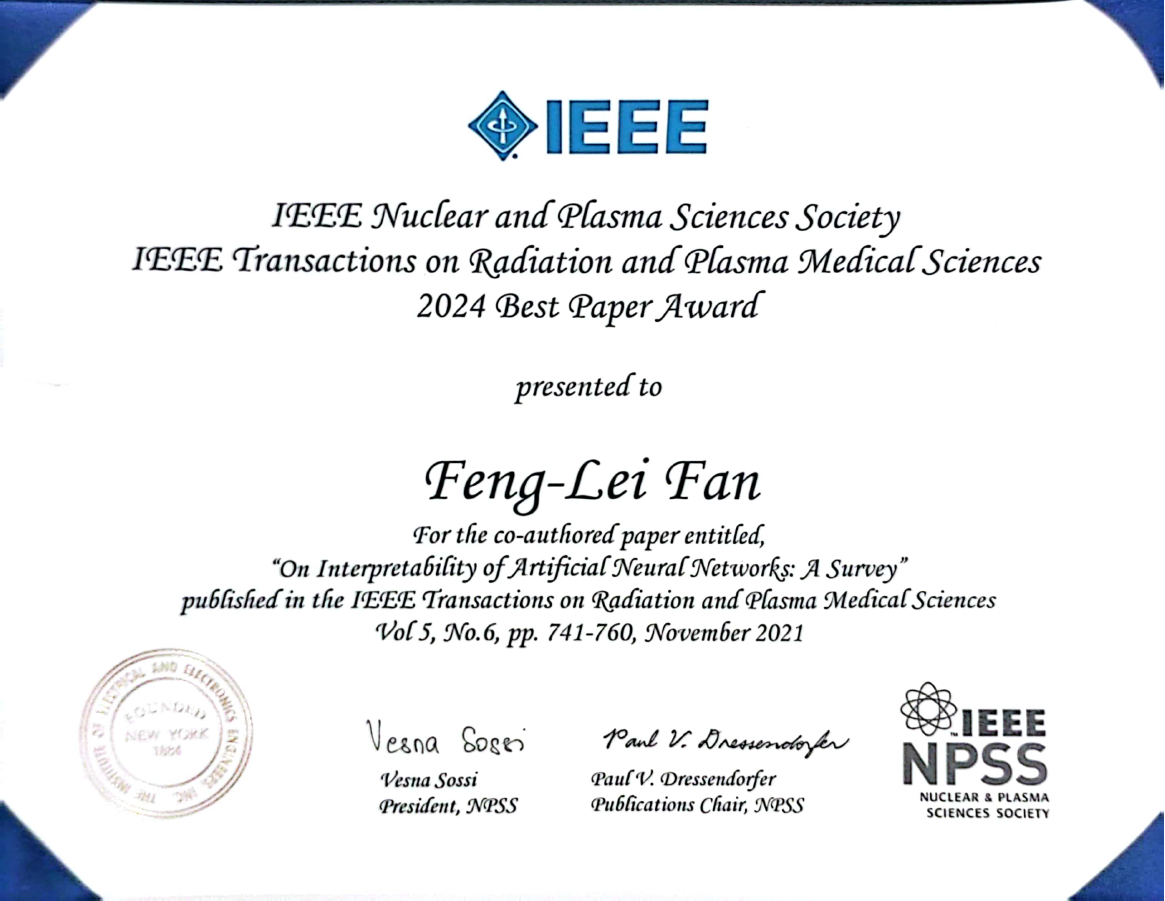

- July 18, 2024: Our paper was selected for the IEEE TRPMS Best Paper Award from the IEEE Nuclear and Plasma Sciences Society.

- May 26, 2024: Our paper was selected as one of the few CVPR 2024 Best Paper Award candidates (24 candidates out of more than 11,000 submissions).

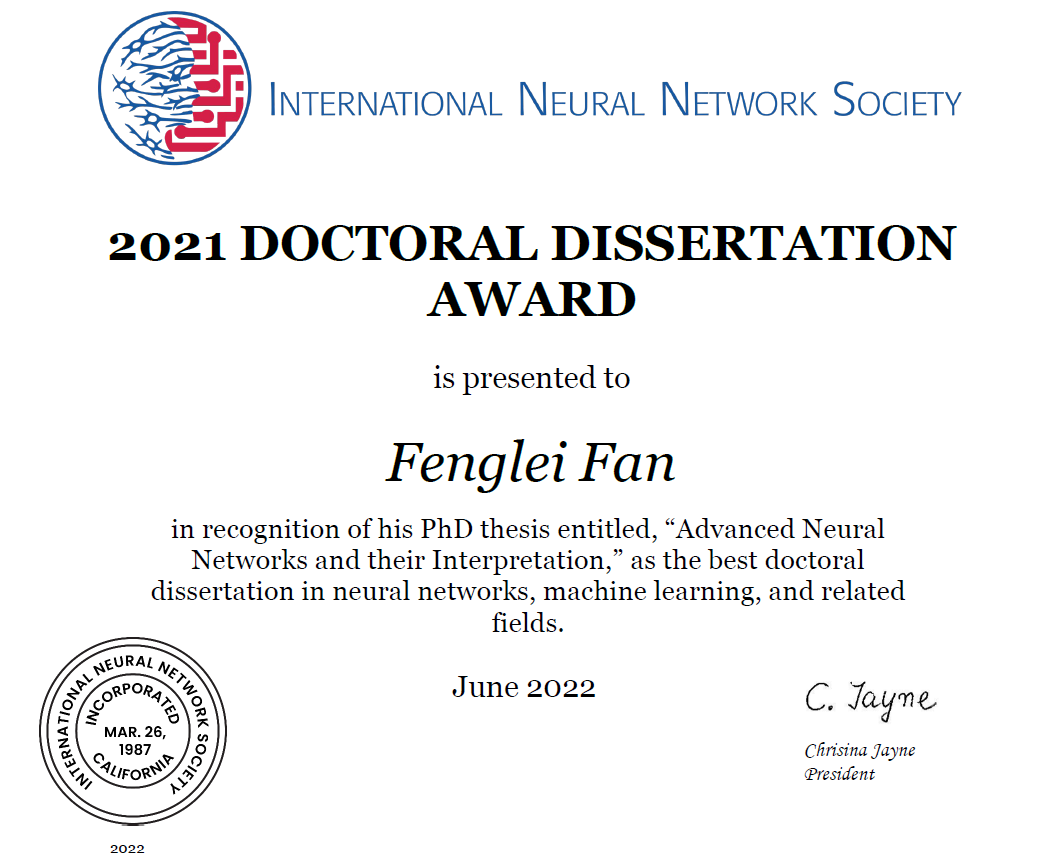

- June 06, 2022: I was honored to receive the 2021 International Neural Network Society Doctoral Dissertation Award.

- April, 2019: I was awarded an IBM AI Scholarship, under which IBM supported my research by covering my tuition and living expenses until graduation.

- 2016: Congxin Scholarship, Harbin Institute of Technology (awarded to only two undergraduates annually).

- 2014: Fuji Xerox Scholarship, Harbin Institute of Technology.

Talks and Presentations

- Invited Talk at School of Computer Science, Jiangxi University of Finance and Economics, Nanchang, China, Jan 16, 2026

- Invited Talk at School of Mathematics, UESTC, Chengdu, China, Dec 23, 2025

- Invited Talk at ISTBI, Fudan University, Shanghai, China, Dec 17, 2025

- Invited Talk at Institute for Brain and Intelligence, Fudan University, Dec 16, 2025

- Invited Talk at School of Information Engineering, Nanchang University (online), Nov 19, 2025

- Invited Talk at School of Biomedical Engineering, Hubei Polytechnic University (online), Nov 5, 2025

- Invited Talk at Chinese Information Processing Society of China (online), Oct 30, 2025

- Invited Talk at BESC 2025, Hong Kong, Oct 16, 2025

- Invited Talk at the Imaging Seminar in Tianyuan Mathematics International Exchange Center, Kunming, China, Oct 4, 2025

- Invited Talk at Huawei Global Storage Expert Forum, Suzhou, China, Sep 12, 2025

- Invited Talk at Department of ECE, Sultan Qaboos University, Sep 4, 2025 (Online)

- Invited Talk at Deep Learning Theory Workshop in Annual Meeting of Chinese Computational Mathematics Society, Changsha, Hunan, August 10, 2025

- Invited Talk at Deep Learning Workshop, RIKEN, Japan, August 7, 2025

- Invited Talk at School of Computer Science, Peking University, July 27, 2025

- Live Talk at "Academic Star" series talk, Huawei Strategic Institute, online, June 24, 2025

- Invited Talk at School of Life Science, Harbin Institute of Technology, June 16, 2025

- Invited Talk at Department of Theory, Huawei, online, June 5, 2025

- Invited Talk at Beijing Normal and Hong Kong Baptist University, Zhuhai, Guangdong, May 28, 2025

- Invited Talk at Jinan University, Guangzhou, Guangdong, May 27, 2025

- Invited Talk at the TongAI conference, Beijing, May 24, 2025

- Invited Talk at Zhejiang University of Finance and Economics, Hangzhou, Zhejiang, May 22, 2025

- Invited Talk at Hangzhou University of Electronic Science and Technology, Hangzhou, Zhejiang, May 21, 2025

- Invited Talk at UESTC, Chengdu, Sichuan, May 15, 2025

- Invited Talk at School of Computing and Data Science, The University of Hong Kong, April 7, 2025

- Invited Talk at IHEP (online), Beijing, March 6, 2025

- Invited Talk at Intelligent Imaging Branch of the Chinese Society for Stereology, Feb 27, 2025

- Invited Talk at Shenzhen Institute of Big Data, Shenzhen, Guangdong, Feb 17, 2025

- Invited Talk at Great Bay University, Dongguan, Guangdong, Jan 4, 2025

- Invited Talk at School of Mechatronics, Shenzhen University, Dec 26, 2024

- Invited Talk at the HKMS-HKSIAM Joint Young Scholars Symposium 2024, Dec 1, 2024

- Invited Talk at the Annual Meeting of Chinese Society for Stereology, Wuxi, China, Oct 27, 2024

- Invited Talk at Shanghai University of Electrical Power, Oct 17, 2024

- Invited Talk at Department of Data Science, City University of Hong Kong, Hong Kong, Sep 30, 2024

- Invited Talk at Workshop "AI for Science", Department of Mathematics, Hong Kong University of Science and Technology, Hong Kong, Sep 19, 2024

- Invited Talk at Hong Kong Baptist University, Hong Kong, Sep 10, 2024

- Invited Talk at *** Department of Consumer Equipment Manufacturing Technology, Dongguan, August 24, 2024

- Invited Talk at ***, Paris, France, July 25, 2024

- Invited Talk at SMU, April 12, 2024

- Invited Talk at FAI, December 9, 2023

- Invited Talk at Sichuan University, November 14, 2023

- Invited talk at CCF-EDA, Beijing, Oct 14, 2023

- Invited talk at BICMR, Peking University, May 23, 2023

- Invited talk at HIT Institute of Advanced Study in Mathematics, April 25, 2023

- Invited talk at Fuzhou University, Apr 1, 2023

- Tutorial on “Introducing Neuronal Diversity into Deep Learning” at AAAI 2023 (AAAI is a top conference in the field of AI; only about 20 tutorials are accepted annually.)

- Invited talk at SUSTech, Jan 12, 2023

- Invited talk at Northeastern University, Jan 8, 2023

- Invited talk at Fudan University, Dec 4, 2022.

- Invited talk at HIT Institute of Advanced Study in Mathematics, November 24, 2022

- Invited talk at National Biomedical Imaging Center, Peking University, October 27, 2022.

- Invited talk at IFMI & ISPEMI 2022, hosted by Chinese Academy of Engineering, August 10, 2022

- Invited talk at Summer School of Xiamen University, July 15, 2022.

- Invited talk at School of Math, Harbin Institute of Technology, April 2022.

- Invited talk at SCF-YSSEC, State Key Laboratory of Scientific and Engineering Computing, China, November 2021 (http://scf.cc.ac.cn/yssec2021/).

- Invited talk at FDA, May 2021.

- Invited job talk at Weill Cornell Medicine, Cornell University, January 2021.

- Invited job talk at Department of Mathematics, Duke University, December 2020.

- Poster presentation at Fully3D 2019, Philadelphia, PA, June 2019.

Conference and Session Organizer

- The 14th AIMS Conference at NYU Abu Dhabi, Dec 16-20, Special Session 122: "Understanding the Learning of Deep Networks: Expressivity, Optimization, and Generalization", organized by Shijun Zhang, Feng-Lei Fan, and Juncai He

- HKSIAM Biennial Conference 2025, with Eric Chung (Co-Chair), Gary Choi (CUHK), Fenglei FAN (CUHK), Kuang HUANG (CUHK), Bangti Jin (Co-Chair), Liu Liu (Communications Secretary), Ronald Lok Ming Lui (General Secretary), Xiaolu Tan (Communications Secretary), Tieyong Zeng (CUHK), and Jun Zou (HKSIAM President)

Representative Work

- Wang G, Fan FL: Dimensionality and dynamics for next-generation artificial neural networks. Patterns (Cell Press), 2025, in press.

- Dong Z, Fan FL*, Liao W, Yan J: Grounding and Enhancing Grid-based Models for Neural Fields, CVPR, 2024, in press (this paper received full scores from all reviewers; CVPR 2024 Best Paper Award Candidate)

- Fan FL, Lai RJ, Wang G: Quasi-Equivalency of Width and Depth of Neural Networks. Journal of Machine Learning Research, 2023, in press (my PhD advisor Prof. Ge Wang listed this paper as one of his 16 representative papers among his 700+ publications)

- Zhang SQ, Wang F, and Fan FL*: Neural Network Gaussian Processes by Increasing Depth. IEEE Transactions on Neural Networks and Learning Systems, 2022 in press (IF=14.25).

- Fan FL, Cong W, and Wang G: A new type of neurons for machine learning. Int. J. Numer. Method. Biomed. Eng., 34.2, e2920, 2018 (the first paper on introducing neuronal diversity into deep learning).

Funding

- PI, Concrete Segmentation and Gradation Analysis, Guangxi Road&Bridge Company of Guangxi Beibu Gulf Investment Group Co., Ltd. (China Top 500), 150,000 RMB, June 2023 – June 2024 (Finished).

- PI, Engineering of Great Mathematical Theorems, Research Contract, ****, 750,000 HKD, June 1, 2024 – Feb 28, 2025 (Finished).

- PI, **** Gift Fund, 990,000 HKD, Apr 1, 2024 – Apr 1, 2026.

- PI, Density Trajectory in Model Compression, Research Contract, ****, 3,540,000 HKD, June 22, 2025 – June 21, 2027.

- PI, Geometric Deep Learning, Gift Fund for Hiring Postdocs, ****, 990,000 HKD, June 22, 2025 – June 21, 2027.

- PI, Develop Optimized Algorithms for On-device Intelligent Speech Recognition, Hong Kong ASTRI, 320,000 HKD, Oct 10, 2025 – Oct 9, 2026.

Teaching

- MATH3320: Data Analytics

- MATH6251: Topics in Mathematical Data Science
